Bit Depth part 1: 8-bit and 16-bit compared

A hidden workflow option

A retoucher who brings a photo from the raw processor into Photoshop needs to make a small but important choice. A careless user may not be aware of this, and effectively lets the software make the decision. Many professionals, on the other hand, consider it essential to pick the right option. Nevertheless, neither Adobe’s raw processors – Camera Raw and Lightroom – nor Photoshop itself asks the user explicitly. They use the setting that the user is supposed to have defined beforehand. And if that has not happened, the factory default is used.

I am referring to the so-called bit depth, for which the options are 8-bit and 16-bit. Go ahead, open a raw file, do any processing and open the image in Photoshop. (In ACR: “Open Image”, in Lightroom: “Edit in… Adobe Photoshop…”) There is no popup asking you whether you want further edits to take place in 8-bit or 16-bit. It’s a preference, set once and used thereafter.

The workflow

Before I dive into the specifics of 8-bit and 16-bit, let me show you the workflow for choosing either.

In Lightroom:

- Go to the menu and choose Edit – Preferences

- Click the tab called External Editing

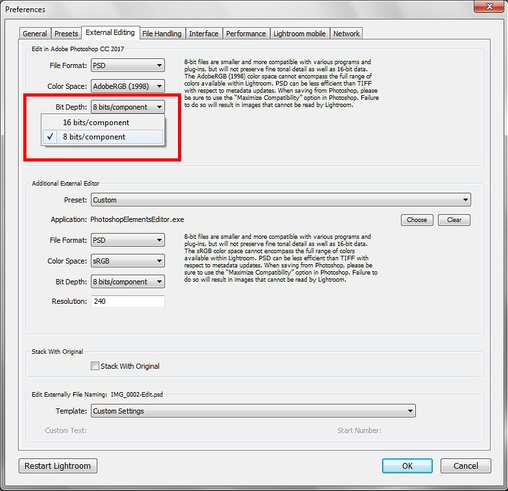

- The third field from the top is Bit Depth. See figure 1. Make your choice and hit OK

Figure 1. Workflow preferences in Lightroom

In Camera Raw:

- In the Camera Raw main popup, under the image is a string that shows the current settings

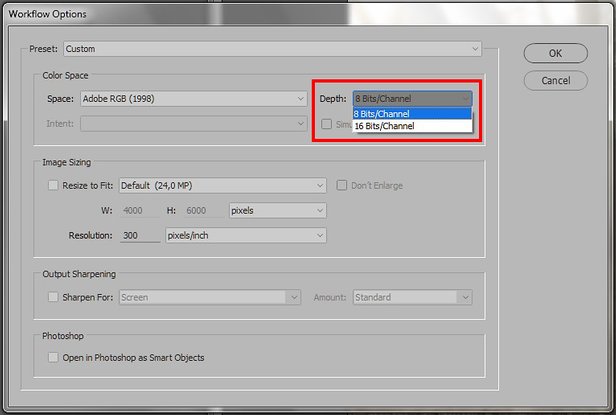

- Click that string and you’ll get a new popup called Workflow Options

- See figure 2. Make your choice and hit OK

Figure 2. Workflow preferences in Camera Raw

You can also change the bit depth explicitly while editing in Photoshop itself: go to the menu and choose Image – Mode – X Bits/Channel. There, even a third option presents itself: 32-bit, which is out of scope for this article. An obvious moment for conversion is at the very end of the process, if a JPEG output file is required: JPEG is 8-bit by definition. Yet the unwary can happily save a 16-bit image as a JPEG, because Photoshop, helpful as always, does the conversion automatically.

In this article, I will explain what 8-bit and 16-bit mean, and how the extra 8 bits in the 16-bit way of coding are used. The next article will explore how bit depth impacts the editing process.

Byting the bits

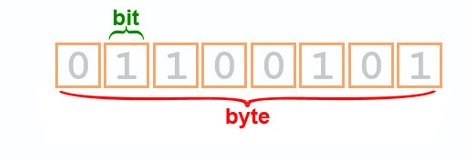

Let’s start with 8-bit. If you don’t know what 8-bit means, you first need to understand what one bit is. A bit is the smallest possible unit of information in computer memory. It can store two possible values: 0 or 1. Put two bits together and they can hold 4 possible values: 00, 01, 10 or 11. Three bits go up to 8 different values: 000, 001, 010, 011, 100, 101, 110 and 111. Every extra bit multiplies the number of possibilities by 2. By the time we have assembled 8 bits, 256 different values are possible. See figure 3 for an illustration.

Figure 3. Eight bits, one byte
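For readers who like to see the doubling spelled out, here is a minimal Python sketch. The function names `value_count` and `all_patterns` are mine, chosen for illustration only.

```python
def value_count(bits):
    """Number of distinct values that `bits` bits can hold: 2 to the power `bits`."""
    return 2 ** bits


def all_patterns(bits):
    """Every bit pattern of the given width, as zero-padded binary strings."""
    return [format(n, f"0{bits}b") for n in range(value_count(bits))]


# Each extra bit doubles the number of possible values.
for bits in (1, 2, 3, 8):
    print(bits, "bit(s):", value_count(bits), "values")

# The eight patterns that three bits can hold, from 000 up to 111.
print(all_patterns(3))
```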

Now for some reason a unit of 8 bits (usually called a byte) happens to be very common in computer architectures. So there we are, the slightly confusing but very standard concept in the world of digital imaging: 8-bit images.

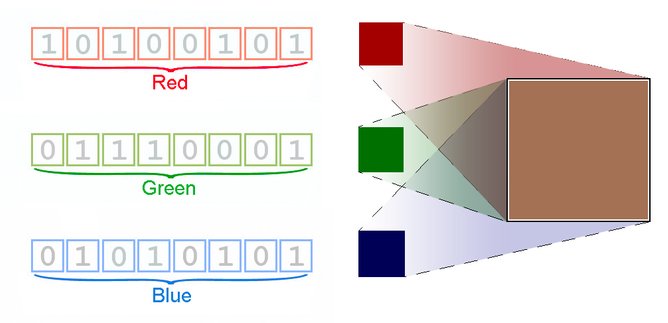

Confusing – because obviously, not the whole image is coded in just 8 bits (if it were, only 256 different images would be possible). Not even one pixel fits in 8 bits (if it did, only 256 different colors would be possible). No – 8 bits are reserved for each color component. In an 8-bit RGB image, each pixel can have 256 possible values for the red component, 256 for the green and 256 for the blue. That’s the story of 8-bit. Every pixel needs, well, 24 bits to store its color information. But never call this way of coding 24-bit or people will surely misunderstand you. It’s just always called 8-bit. Figure 4 shows how these three 8-bit entities make up one color.

Figure 4. 8-bit representation of a middle brown (RGB 165,113,85)
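The same brown can be taken apart in a few lines of Python. This is only a sketch: the helper names `pack_rgb` and `unpack_rgb` are hypothetical, and putting red in the highest bits is an assumption made for illustration, not a statement about how Photoshop lays out pixels in memory.

```python
def pack_rgb(r, g, b):
    """Combine three 8-bit components into one 24-bit integer (R highest; an assumed order)."""
    for component in (r, g, b):
        if not 0 <= component <= 255:
            raise ValueError("each component must fit in 8 bits (0-255)")
    return (r << 16) | (g << 8) | b


def unpack_rgb(packed):
    """Split a 24-bit integer back into its three 8-bit components."""
    return (packed >> 16) & 0xFF, (packed >> 8) & 0xFF, packed & 0xFF


brown = (165, 113, 85)

# One byte per component.
for name, value in zip("RGB", brown):
    print(name, value, format(value, "08b"))

# Three bytes side by side: the 24 bits that one pixel needs.
packed = pack_rgb(*brown)
print(format(packed, "024b"))
```

Unpacking the 24-bit number returns the original three components, which is all that "8 bits per channel" really says.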

8-bit by the numbers

Now that we have that clear, let’s move on to the next question. Given this 8-bit schema, how many colors are possible? That’s simple arithmetic, assuming three components of RGB: 256 x 256 x 256, which is about 16.7 million colors. Every combination yields a different color, so we really can code 16.7 million different colors in 8-bit RGB.

As an aside: what about CMYK and LAB?

- CMYK has four components, so in theory 256 * 256 * 256 * 256 colors are possible, over 4 billion different combinations. But in reality, that number isn’t achieved. The CMYK components do not use the full 256 possibilities that 8 bits can hold. Instead, only 0-100 are used. The theoretical range is 101 * 101 * 101 * 101, equalling more than 100 million colors. However, even that number is too high, since many different combinations yield the same color.

- LAB, like RGB, has three components, but only the A and B components use the full range of 256 values. The L component has only 101. The theoretical maximum is about 6.6 million colors, but again this number is flattering, because a good many of these combinations do not correspond to any existing color.
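The counts quoted for RGB, CMYK and LAB are plain multiplications and easy to verify. The sketch below only reproduces the theoretical maxima from the text; the variable names are mine, and it makes no attempt to weed out duplicate or non-existing colors.

```python
# Theoretical number of codable combinations per color mode in 8-bit.
rgb_colors = 256 ** 3           # three components, 256 values each
cmyk_full_range = 256 ** 4      # four components, if all 256 values were used
cmyk_percentages = 101 ** 4     # four components, values 0-100 only
lab_colors = 101 * 256 * 256    # L has 101 values, A and B have 256 each

for label, count in [
    ("RGB", rgb_colors),
    ("CMYK (full 8-bit range)", cmyk_full_range),
    ("CMYK (0-100 per component)", cmyk_percentages),
    ("LAB", lab_colors),
]:
    print(f"{label}: {count:,}")
```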

For the sake of simplicity, let’s restrict ourselves to RGB in the remainder of this article.

Right, so in 8-bit RGB, 16.7 million possible colors exist. This may seem a phenomenal figure, but how big is it really? How many different colors can a human with good vision actually distinguish? Do a Google search, and you will find figures that vary roughly between 1 million and 10 million. Also a lot, but still less than the 16.7 million colors of 8-bit RGB. Eight bits of color coding are more than sufficient then, aren’t they?

Well, it's not so simple.

16-bit by the numbers

The arithmetic for 16-bit is very similar to that of 8-bit, except for one surprise. One would assume it’s 16 bits per color component this time (we restrict ourselves to RGB again). But no: for some reason that I have not been able to find out, only 15 bits are used. This means that over 32,000 different values are possible for each of R, G and B, yielding about 35 trillion possible colors. A stupid number, right? A huge waste of memory and disk space! Or…?

Despite this enormous figure, many websites and Photoshop books tell you that working in 16-bit is better than working in 8-bit, for quality reasons. Let me try to analyze the difference between the two then.
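Before moving on, the 16-bit figures can be checked the same way. The sketch below takes 15 usable bits per component from the text. The storage part rests on assumptions of my own: each component still occupies a full two-byte slot, the image is an uncompressed three-channel file without layers, and the 6000 x 4000 pixel size is just a hypothetical example.

```python
# Number of values per component and number of colors, per the text.
levels_8bit = 2 ** 8
levels_16bit = 2 ** 15          # only 15 of the 16 bits are used
colors_8bit = levels_8bit ** 3
colors_16bit = levels_16bit ** 3

print(f"8-bit:  {levels_8bit:,} levels, {colors_8bit:,} colors")
print(f"16-bit: {levels_16bit:,} levels, {colors_16bit:,} colors")


def uncompressed_bytes(width, height, bytes_per_channel, channels=3):
    """Raw pixel data size; ignores compression, layers and metadata (assumption)."""
    return width * height * channels * bytes_per_channel


# Hypothetical 24-megapixel image.
width, height = 6000, 4000
size_8bit = uncompressed_bytes(width, height, bytes_per_channel=1)
size_16bit = uncompressed_bytes(width, height, bytes_per_channel=2)
print(f"8-bit:  {size_8bit / 1e6:.0f} MB")
print(f"16-bit: {size_16bit / 1e6:.0f} MB")
```

Under these assumptions the pixel data simply doubles in size, while the number of codable colors grows by a factor of about two million.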

The two scales

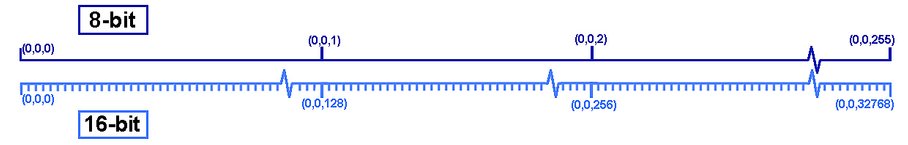

Comparing 8-bit and 16-bit, it’s 256 against 32,768 – so where does Photoshop put the surplus of 32,512 values that 16-bit gives us? The answer is best illustrated in a figure. For each step in 8-bit RGB, 128 steps are available in 16-bit RGB. See figure 5 which I hope makes my point clear.

Figure 5. The 8-bit and 16-bit scales.

The figure shows the granularity of 8-bit coding on the dark blue line. Note how far single steps seem to lie apart. The (light blue) 16-bit scale is much finer.

So what can we see here? The extra bits are used to fill up the spaces between two consecutive 8-bit values – there is nothing extra at the very ends. 16-bit coding does not give extra dynamic range. The lightest highlight in 8-bit is the same as it is in 16-bit, and similarly for the darkest shadow. This is an important notion, and often forgotten or neglected in explanations.
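The relation between the two scales can be sketched in Python. This is a simplified model that follows the numbers used in this article (256 and 32,768 levels, so 128 fine steps per coarse step); the plain division in `to_8bit` is my assumption, and Photoshop’s actual conversion may round differently.

```python
from collections import Counter

LEVELS_8BIT = 256
LEVELS_16BIT = 32768
STEPS_PER_8BIT_STEP = LEVELS_16BIT // LEVELS_8BIT  # 128


def to_8bit(value_16bit):
    """Map a 16-bit scale value onto the 8-bit scale (simplified: plain division)."""
    return value_16bit // STEPS_PER_8BIT_STEP


# Count how many 16-bit values end up on each 8-bit value.
bucket_sizes = Counter(to_8bit(v) for v in range(LEVELS_16BIT))

print("surplus values:", LEVELS_16BIT - LEVELS_8BIT)
print("16-bit values per 8-bit value:", set(bucket_sizes.values()))
print("lowest and highest 8-bit value reached:", min(bucket_sizes), max(bucket_sizes))
```

In this model every 8-bit value receives exactly 128 of the 16-bit values, and none of them lands below 0 or above 255: the extra values all sit in between, not beyond the ends.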

For those familiar with the properties of raw image files, this may be a disappointing fact. (It was for me when I first realized this.) In raw, more dynamic range is available than what can be made visible on a screen. That extra range is “hidden” in extra image bits and can be recovered by the raw processor. See figure 6 for an example. Both images were developed from one raw file, one with exposure -2, one with +2.

Figure 6. Two JPEGs developed from one raw file

Notice the detail in each version that is practically invisible in the other. All information is there, captured in "extra bits" that a processed image, even in 16-bit, cannot provide. Right when we open an image in Photoshop proper, the dynamic range is fixed, limited, for 16-bit as well as for 8-bit coding. This is true no matter what the original raw file may have contained.
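A toy model may help to see why the range is fixed once the image has been developed. Everything in the sketch below is an assumption for illustration: the scene values are invented, exposure is modelled as a plain multiplication by 2 per stop, and tone curves and gamma are ignored. It is not how a raw processor really works, but it shows the clipping.

```python
def develop(linear_value, exposure_stops, levels=256):
    """Toy raw development: scale by 2**stops, clip to 0..1, quantize to `levels` values."""
    scaled = linear_value * 2 ** exposure_stops
    clipped = min(max(scaled, 0.0), 1.0)
    return round(clipped * (levels - 1))


# Two invented highlight values in the raw data; 1.0 is output white at exposure 0.
highlight_a, highlight_b = 2.0, 3.5

for levels in (256, 32768):
    dark = [develop(v, -2, levels) for v in (highlight_a, highlight_b)]
    bright = [develop(v, +2, levels) for v in (highlight_a, highlight_b)]
    print(f"{levels} levels, exposure -2: {dark}")
    print(f"{levels} levels, exposure +2: {bright}")
```

In the -2 version the two highlights come out as different values, so the detail is visible. In the +2 version both are clipped to the maximum, and that happens on the 256-level scale as well as on the 32,768-level scale: the finer scale offers no room above white.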

Which is best?

The question remains: which of the two should we use for editing, 8-bit or 16-bit? I will leave that question for a future article. Don’t expect a definitive answer, though: I prefer to indicate advantages and disadvantages, and leave the actual choice to you.

Gerald Bakker, 22 Dec. 2016
